Local First

AI belongs inside your product, close to the data, decisions, and users it supports — not halfway around the world.

Invisible by Design

Our runtime integrates quietly — no infrastructure overhauls, no deployment friction, no unexpected interference.

System-Aware

We play well with others. Our engine respects system priorities and runs in harmony with critical processes.

Your Models, Your Rules

We don't own your logic — you do. You bring the model, we make it run where and how you need it.

Built for Constraint

We perform where others fail: in tight memory, low power, disconnected, or time-sensitive environments.

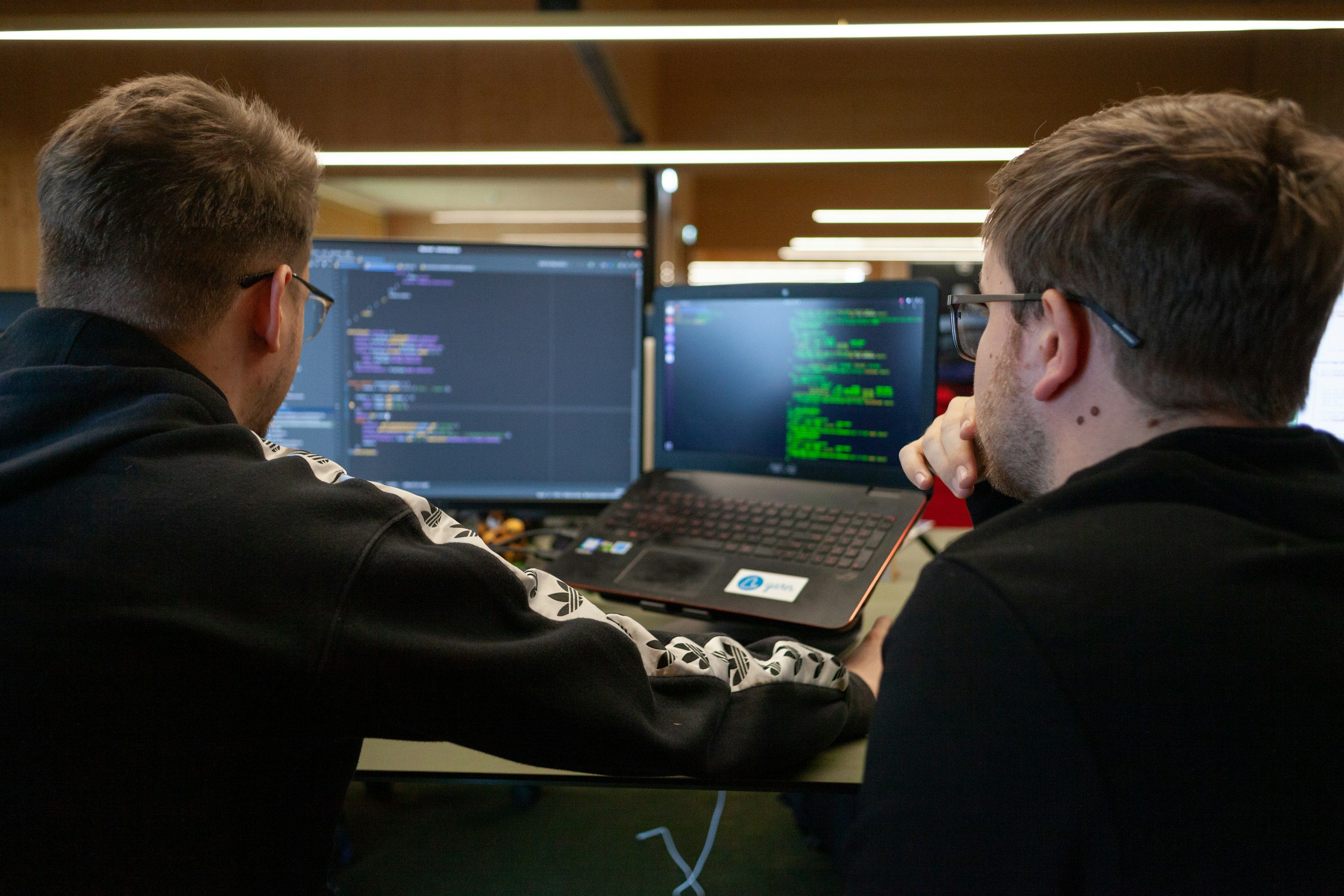

Made for Builders

We support the teams designing what’s next — with the flexibility, safety, and tools to get there faster.