On-Device Model Architecture: Where GPT-OSS Fits in the Edge AI Landscape


Edge devices have limited memory capacity and bandwidth. Mixture of Experts (MoE) models, which activate only a small subset of their parameters per token, are a strong fit for running sophisticated AI reasoning locally under those limits.

Active vs. Passive Parameters: The MoE Advantage

In addition to enabling selective activation, MoE models significantly reduce memory bandwidth pressure. By only activating a small subset of parameters per token (e.g., 3.6B in GPT-OSS 20B), memory traffic drops dramatically compared to dense models that require loading the full parameter set for each inference step.
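To make the bandwidth argument concrete, here is a back-of-the-envelope sketch. It assumes 4-bit weights and counts only weight reads, ignoring the KV cache, activations, and any layers stored at higher precision. The dense 21B comparison model is hypothetical, chosen only to match the total size of GPT-OSS 20B.

```python
def weight_bytes_per_token(active_params: float, bits_per_weight: float) -> float:
    """Bytes of weights read to produce one token (weights only, an approximation)."""
    return active_params * bits_per_weight / 8


# GPT-OSS 20B activates about 3.6B parameters per token.
moe_bytes = weight_bytes_per_token(3.6e9, 4)
# Hypothetical dense model of the same total size: every parameter is read.
dense_bytes = weight_bytes_per_token(21e9, 4)

print(f"MoE:   {moe_bytes / 1e9:.1f} GB of weights per token")
print(f"Dense: {dense_bytes / 1e9:.1f} GB of weights per token")
print(f"Dense reads {dense_bytes / moe_bytes:.1f}x more weight data per token")
```

Under these assumptions the MoE model reads about 1.8 GB of weights per token, against about 10.5 GB for the dense model.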

However, MoE still demands a considerable total model footprint. While the active set is small, the full parameter pool (21B for GPT-OSS 20B, 30B for Qwen3-30B-A3B) must remain in memory. This makes ultra-light devices with limited RAM less suitable for large MoE models. In practice, models in the 15B to 30B range are a sweet spot. For example, a 30B model at Q4 (4-bit) quantization is roughly 16GB, which aligns with the memory available on high-end edge devices and premium AI PCs.
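The footprint figures can be checked with simple arithmetic. The sketch below counts raw weight storage only; quantization scales, the KV cache, and runtime buffers add to it, which is presumably why a 30B model at 4 bits lands closer to 16GB than to the 15GB of bare weights. The function name is illustrative.

```python
def weight_footprint_gb(total_params: float, bits_per_weight: float) -> float:
    """Raw weight storage in GB (10**9 bytes), excluding scales and runtime overhead."""
    return total_params * bits_per_weight / 8 / 1e9


models = [("GPT-OSS 20B", 21e9), ("Qwen3-30B-A3B", 30e9)]

for name, total_params in models:
    for bits in (16, 8, 4):
        gb = weight_footprint_gb(total_params, bits)
        print(f"{name:<14} {bits:>2}-bit: {gb:5.1f} GB")
```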


Performance Implications for CPU+iGPU Systems

On shared memory architectures (typical in modern laptops and edge systems), inference is often bottlenecked by memory bandwidth, not compute. This applies to systems where CPU, iGPU, and NPU share the same memory bus. While smaller models may hit compute limits, larger models—especially those in MoE format—are increasingly memory-bound.

Importantly:

- Most future devices are moving to shared memory designs to streamline memory access across heterogeneous compute units.

- This makes MoE models particularly advantageous, since the working set (the active parameters) stays small and fits within bandwidth-constrained environments.

- Speculative decoding for MoE models may help further by pre-loading likely expert paths, improving utilization of both memory and compute without overloading the bandwidth.

- A model with about 3.6B active parameters, like GPT-OSS 20B, performs well on these shared systems, offering a strong throughput-to-cost ratio.

Modern CPU+iGPU combinations can effectively handle MoE inference, with systems requiring only 16GB of memory compared to 80GB+ for traditional large models. This enables deployment on mainstream hardware while maintaining sub-second response times.
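If decoding is memory-bound, an upper bound on throughput follows from dividing memory bandwidth by the weight bytes read per token. The sketch below assumes a hypothetical shared-memory bandwidth of 100 GB/s and 4-bit weights; real systems will fall below this ceiling because of KV cache traffic, compute, and scheduling overhead.

```python
def max_tokens_per_second(active_params: float,
                          bits_per_weight: float,
                          bandwidth_gb_s: float) -> float:
    """Bandwidth-bound ceiling on decode speed, counting weight reads only."""
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


ASSUMED_BANDWIDTH_GB_S = 100.0  # hypothetical figure, not a measured value

moe_ceiling = max_tokens_per_second(3.6e9, 4, ASSUMED_BANDWIDTH_GB_S)
dense_ceiling = max_tokens_per_second(21e9, 4, ASSUMED_BANDWIDTH_GB_S)

print(f"3.6B active (MoE): up to {moe_ceiling:.1f} tokens/s")
print(f"21B dense:         up to {dense_ceiling:.1f} tokens/s")
```

The absolute numbers depend entirely on the assumed bandwidth. The ratio between the two does not, and it is the ratio that drives the case for MoE on shared-memory systems.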

CPU+iGPU Advantage Over Discrete GPUs: MoE models particularly benefit from CPU+iGPU configurations with unified memory architectures because they can use the full system memory pool (32GB-128GB+) rather than being constrained by discrete GPU memory limits (typically 8-24GB). While discrete GPUs offer higher computational throughput, MoE models' moderate active parameter counts mean the bottleneck shifts from compute to memory capacity. The system RAM accessible through CPU+iGPU setups provides the headroom needed for the full model, while integrated graphics deliver sufficient processing power for the active parameter subset.

Inference speed typically matches that of a dense model whose size equals the MoE model's active parameter count. GPT-OSS 20B runs about as fast as a 3.6B dense model and Qwen3-30B-A3B about as fast as a 3B dense model, but both draw on the full knowledge base of their larger parameter pools.
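The capacity argument can be sketched as a simple fit check. The 25% reserve for the OS, KV cache, and runtime buffers is an assumption chosen for illustration, as are the function name and the specific pool sizes sampled from the ranges above.

```python
def fits(model_gb: float, pool_gb: float, reserve_fraction: float = 0.25) -> bool:
    """True if the model fits after reserving part of the pool for everything else."""
    return model_gb <= pool_gb * (1 - reserve_fraction)


pools = [
    ("discrete GPU, 8GB", 8),
    ("discrete GPU, 24GB", 24),
    ("unified memory, 32GB", 32),
    ("unified memory, 128GB", 128),
]

# Raw weight sizes for a 30B-parameter model at 4-bit and 16-bit precision.
for label, model_gb in [("30B @ 4-bit", 15.0), ("30B @ 16-bit", 60.0)]:
    for pool_name, pool_gb in pools:
        verdict = "fits" if fits(model_gb, pool_gb) else "does not fit"
        print(f"{label:<13} on {pool_name:<22}: {verdict}")
```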

Router Intelligence and Quantization Benefits

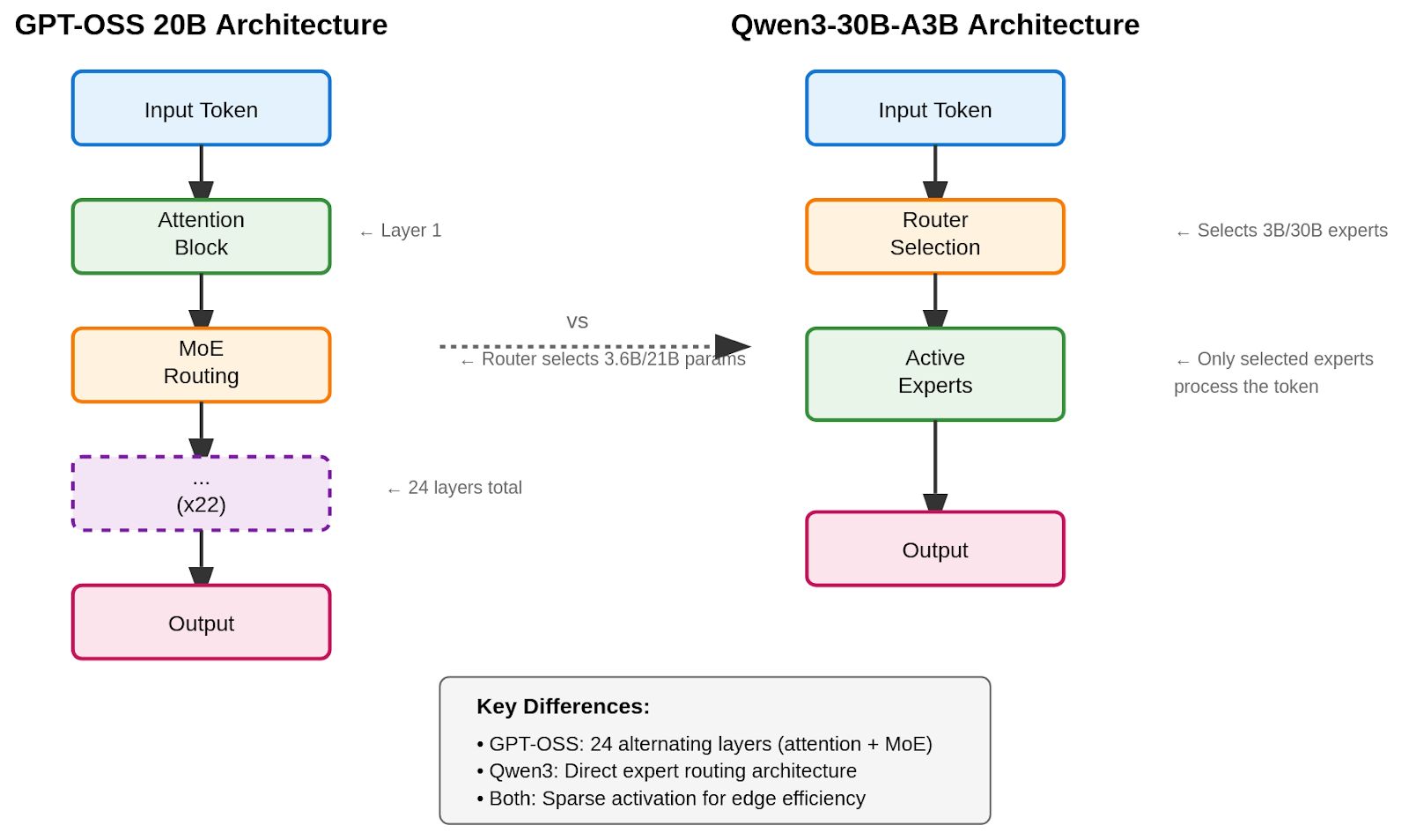

The routing mechanism in an MoE model selects experts per token based on context, so compute and memory traffic go only to the experts that token needs. The two models discussed here implement this differently:

GPT-OSS 20B Router: Sits inside a 24-layer Transformer in which each layer pairs an attention block with an MoE block, activating 3.6B out of 21B total parameters per token.

Qwen3-30B-A3B Router: Employs an advanced MoE architecture that activates only 3B out of 30B parameters while maintaining performance comparable to much larger dense models.

Different routing strategies impact device compatibility and performance:

- GPT-OSS 20B uses a simpler, alternating attention-MoE block structure with modest expert routing logic. This provides predictable activation paths, which simplifies memory prefetching and lowers latency variance, both useful for constrained or real-time systems.

- Qwen3-30B-A3B uses more sophisticated routing and achieves higher parameter efficiency, but at the cost of more dynamic compute paths. This may introduce variability in latency and power draw, which can be a concern for mobile or thermally-constrained environments.

From a device perspective, simpler routing favors predictability and deterministic memory patterns, while more dynamic routing can maximize performance but requires tighter scheduling and runtime coordination.
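For readers unfamiliar with expert routing, the following is a minimal sketch of generic top-k gating in plain Python. It is not the router of either model: the expert functions, logits, and the choice to apply softmax over only the selected experts are illustrative assumptions.

```python
import math


def route_token(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Softmax over the selected logits only, so the k weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_layer(x, experts, router_logits, k):
    """Weighted sum of the selected experts' outputs; other experts never run."""
    return sum(w * experts[i](x) for i, w in route_token(router_logits, k))


# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]  # in a real model these come from a learned projection

selected = route_token(logits, k=2)
output = moe_layer(1.0, experts, logits, k=2)
print(selected)
print(output)
```

Only the experts returned by `route_token` are evaluated, which is why the weights of the unselected experts never need to be read for that token.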

On the quantization front:

- GPT-OSS adopts 4-bit MXFP4, which cuts weight memory by roughly 75% relative to 16-bit weights with minimal accuracy loss. This enables deployment on devices with 16GB or less of system memory.

- For on-device deployment, 4-bit quantization is essential to hit target memory and thermal envelopes, and also helps keep inference throughput acceptable across CPU+iGPU setups.
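As an illustration of how a block-scaled 4-bit format works, here is a simplified sketch modeled on the published MX format layout: blocks of 32 values share one power-of-two scale, and each value is stored as a 4-bit E2M1 float. The rounding here is plain nearest-value and tie handling is simplified, so treat it as a teaching aid rather than a description of how GPT-OSS or any production kernel quantizes weights.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (sign is stored separately).
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 32
ELEMENT_EMAX = 2  # exponent of the largest E2M1 value: 6.0 = 1.5 * 2**2


def quantize_block(values):
    """Quantize one block to (shared_exponent, signed FP4 values)."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return 0, [0.0] * len(values)
    # Power-of-two scale chosen so the largest value lands near the top of the FP4 range.
    shared_exp = math.floor(math.log2(amax)) - ELEMENT_EMAX
    scale = 2.0 ** shared_exp
    quantized = []
    for v in values:
        target = min(abs(v) / scale, FP4_E2M1_MAGNITUDES[-1])  # clamp to the max magnitude
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append(math.copysign(nearest, v))
    return shared_exp, quantized


def dequantize_block(shared_exp, quantized):
    """Recover approximate values by reapplying the shared scale."""
    return [q * 2.0 ** shared_exp for q in quantized]


def bits_per_weight(element_bits=4, scale_bits=8, block_size=BLOCK_SIZE):
    """Effective storage cost once the shared scale is amortized over the block."""
    return element_bits + scale_bits / block_size


exp, q = quantize_block([0.1, 0.5, 1.0, -3.0, 7.5])
print(exp, q, dequantize_block(exp, q))

effective_bits = bits_per_weight()
savings_vs_16_bit = 1 - effective_bits / 16
print(f"{effective_bits} bits per weight, {savings_vs_16_bit:.1%} smaller than 16-bit")
```

With the shared scale included, the effective cost is 4.25 bits per weight, so the saving against 16-bit weights is slightly under the headline 75%.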

Comparative Analysis: Two MoE Approaches

Both models demonstrate different paths to edge optimization:

GPT-OSS 20B emphasizes:

- Aggressive quantization with transparent reasoning processes

- Configurable effort levels for different use cases

- Predictable memory usage patterns

Qwen3-30B-A3B focuses on:

- Superior STEM and coding performance

- Exceptional parameter efficiency (outperforming models with 10x more active parameters)

- Optimized sparse activation patterns

The Edge AI Revolution

MoE models represent a paradigm shift from centralized cloud inference to distributed edge intelligence. The combination of selective parameter activation, intelligent routing, and efficient quantization enables:

- Cost Reduction: Elimination of expensive discrete GPU requirements

- Latency Improvement: Local processing without network dependencies

- Privacy Enhancement: Sensitive data processing remains on-device

- Scalability: Horizontal deployment across multiple edge devices

The future of AI inference lies in these efficient architectures that deliver sophisticated reasoning capabilities while operating within the practical constraints of edge hardware. MoE models have transformed the question from "Can we run advanced AI at the edge?" to "How do we optimize deployment for specific use cases?"

As MoE models become the standard for edge AI deployment, specialized inferencing solutions are essential to unlock their full potential. Our AI inferencing platform is purpose-built for this MoE-driven future, addressing the unique performance challenges of sparse activation patterns, dynamic routing optimization, and efficient memory management across diverse edge hardware configurations. If you're looking to deploy MoE models at scale while overcoming the complexities of quantization strategies, hardware compatibility, and inference optimization, we provide the comprehensive solution that transforms cutting-edge MoE research into production-ready edge AI systems.

Ready to Get Started?

OpenInfer is now available! Sign up today to gain access and experience these performance gains for yourself.