Boosting Local Inference with Speculative Decoding

Engineering

In our recent posts, we’ve explored how CPUs can deliver impressive results for local LLM inference, at times rivaling GPUs, especially when LLMs push against a system’s memory bandwidth limits. These bandwidth limits are a major hurdle for great local AI, particularly as workflows incorporate multiple models and components that all add memory pressure. Quantization reduces the precision of model weights to ease that pressure, but it sacrifices accuracy, which is problematic for precision-sensitive tasks like tool calling. Enter speculative decoding, a widely adopted technique that mitigates memory bandwidth bottlenecks while preserving output quality. Today we’ll explore how speculative decoding accelerates single-model workflows on local clients.

The Memory Pressure Equation

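Speculative decoding accelerates inference on memory bandwidth-bound systems using two models: a target model (the primary, high-quality LLM) and a draft model (a smaller, faster model). The draft model cheaply proposes several upcoming tokens, and the target model then checks all of them in a single batched pass, keeping the proposed tokens up to the first one it disagrees with. Although it may seem counterintuitive, running two models this way can be faster than running one.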
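To make the draft-then-verify loop concrete, here is a minimal Python sketch of greedy speculative decoding. The "models" are toy functions that return the next token for a sequence, and the names `speculative_generate`, `greedy_generate`, `toy_target`, and `toy_draft` are hypothetical illustrations, not part of any inference engine's API. Verification is simulated with a loop; a real engine would score all drafted positions in one batched target pass, which is where the bandwidth savings come from.

```python
from typing import Callable, List

# Hypothetical toy "model": maps a token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_generate(target: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Standard autoregressive decoding: one target pass per new token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(target(out))
    return out


def speculative_generate(target: NextToken, draft: NextToken,
                         prompt: List[int], max_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding with k draft tokens per round."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        # 1) The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2) The target checks each drafted position. A real engine does this
        #    in one batched forward pass; the loop here only simulates it.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                # First mismatch: keep the target's token and drop the rest
                # of the draft (the "context rewind").
                accepted.append(expected)
                break
            accepted.append(tok)
        else:
            # Every draft token matched, so the same pass yields a bonus token.
            accepted.append(target(out + accepted))
        out.extend(accepted)
    # The last round may overshoot max_new; trim to the requested length.
    return out[:len(prompt) + max_new]


def toy_target(seq: List[int]) -> int:
    return (seq[-1] + 1) % 10


def toy_draft(seq: List[int]) -> int:
    # Deliberately predicts 0 whenever len(seq) is a multiple of 3.
    return 0 if len(seq) % 3 == 0 else (seq[-1] + 1) % 10


print(speculative_generate(toy_target, toy_draft, [3], 12) ==
      greedy_generate(toy_target, [3], 12))  # True
```

Because a drafted token is kept only when it matches the target's own greedy choice, this variant produces exactly the same output as running the target alone; the draft model only changes how many target passes are needed. Sampling-based variants use a probabilistic acceptance rule instead.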

In standard autoregressive decoding, an LLM generates one token at a time, and each token requires reading the model’s full weights from RAM. For an 8B-parameter model with 8-bit weights, that means transferring 8GB per token. On a system with 128GB/s of memory bandwidth, this caps throughput at ~16 tokens per second (128GB/s ÷ 8GB), before counting other memory traffic such as the KV-cache. Speculative decoding lets the target model process several tokens in one iteration, reading its weights once to produce multiple tokens. If all 8 tokens in a batch for the same target model were accepted, throughput could theoretically rise to ~128 tokens per second.* Running the draft model, rejecting mispredicted tokens, and rewinding the context reduce this gain, yet speculative decoding still significantly improves efficiency on memory-bound systems.
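The arithmetic above can be reproduced with a back-of-the-envelope bandwidth model. This is a simplified sketch under the same assumptions as the text: each forward pass streams the full weights from RAM once, and `extra_gb_per_pass` (a hypothetical parameter) stands in for other traffic such as KV-cache reads.

```python
def decode_tokens_per_sec(weight_gb: float, bandwidth_gbps: float,
                          tokens_per_pass: float = 1.0,
                          extra_gb_per_pass: float = 0.0) -> float:
    """Upper bound on decode throughput for a memory-bound system.

    Every forward pass streams the weights (plus any extra traffic) from RAM,
    and each pass yields `tokens_per_pass` accepted tokens.
    """
    passes_per_sec = bandwidth_gbps / (weight_gb + extra_gb_per_pass)
    return passes_per_sec * tokens_per_pass


# Standard decoding: 8 GB of weights over 128 GB/s.
print(decode_tokens_per_sec(8, 128))                      # 16.0
# Idealized speculative decoding: all 8 tokens accepted on every pass.
print(decode_tokens_per_sec(8, 128, tokens_per_pass=8))  # 128.0
# Adding 1 GB of other traffic per pass lowers the ceiling.
print(decode_tokens_per_sec(8, 128, extra_gb_per_pass=1))  # ~14.2
```

Any nonzero extra traffic, or any rejected draft token, lowers these figures, which is why real-world throughput falls below the theoretical ceilings.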

Real-World Performance

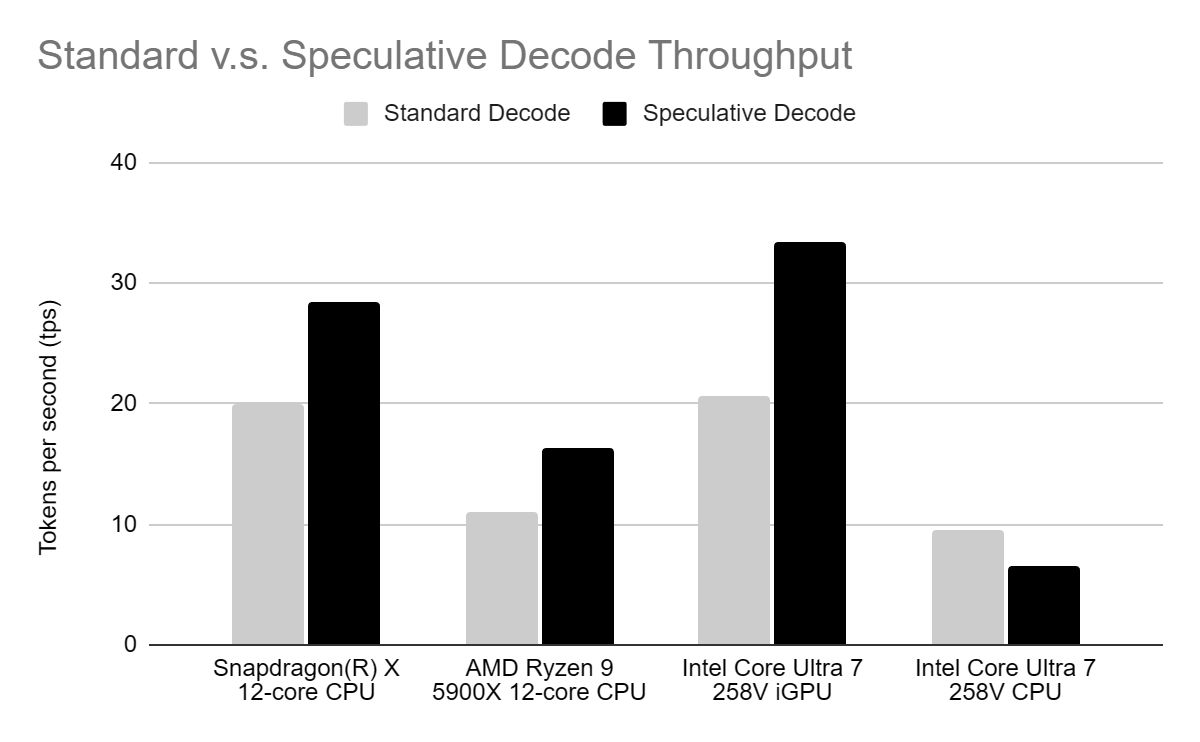

Our tests show that speculative decoding delivers significant throughput gains with minimal quality loss on memory-bound systems. The chart below compares standard decoding (Llama-3.1-8B, 4-bit quantization) to speculative decoding (with Llama-3.2-1B, 4-bit quantization as the draft model):

Speculative decoding significantly improves throughput on most of the compute units we tested, which translates directly into a better user experience. It can also reduce power consumption, since the compute units and memory controller stay busy for less time per generated token. However, on the Intel Core Ultra 7 CPU we observed a throughput decrease, indicating a compute bottleneck, while the same chip’s iGPU showed significant gains because it is limited by memory bandwidth. This discrepancy highlights that compute units generally fall into two categories:

- Memory Bound: these benefit from speculative decoding because it eases pressure on memory bandwidth.

- Compute Bound: these perform worse with speculative decoding because of its increased compute requirements.
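A simple roofline-style estimate shows why the same technique can help one compute unit and hurt another. The sketch below assumes a target-model pass costs the larger of its weight-streaming time and its compute time (roughly 2 FLOPs per parameter per token). The `Device` entries use made-up bandwidth and TFLOPS figures chosen for illustration, not measured specs of the Intel Core Ultra 7 or any other chip, and `pass_time` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    bandwidth_gbps: float  # sustained memory bandwidth in GB/s (assumed)
    tflops: float          # sustained compute in TFLOP/s (assumed)


def pass_time(dev: Device, params_b: float, bytes_per_param: float, n_tokens: int):
    """Estimated seconds for one target pass over n_tokens, plus the bottleneck."""
    memory_s = params_b * 1e9 * bytes_per_param / (dev.bandwidth_gbps * 1e9)
    # Roughly 2 FLOPs (multiply + add) per parameter per token.
    compute_s = 2 * params_b * 1e9 * n_tokens / (dev.tflops * 1e12)
    bound = "memory" if memory_s >= compute_s else "compute"
    return max(memory_s, compute_s), bound


# Hypothetical devices sharing 100 GB/s of bandwidth but with different compute.
cpu = Device("hypothetical CPU", bandwidth_gbps=100, tflops=1)
igpu = Device("hypothetical iGPU", bandwidth_gbps=100, tflops=20)

for dev in (cpu, igpu):
    # 8B-parameter target at 4-bit (0.5 bytes/param): 1 token vs. 8 tokens per pass.
    t1, _ = pass_time(dev, 8, 0.5, 1)
    t8, bound = pass_time(dev, 8, 0.5, 8)
    print(f"{dev.name}: {bound}-bound at 8 tokens, pass cost x{t8 / t1:.1f}")
```

With these assumed numbers, verifying 8 tokens costs the iGPU no more than generating one, while the CPU pays about 3.2x per pass, so rejected draft tokens and draft-model work quickly erase its gains.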

Considerations for Local Inference

As its real-world performance illustrates, speculative decoding excels at reducing memory bandwidth demand, but it introduces tradeoffs:

- Extra Memory Usage: The draft model’s weights, KV-cache, and context increase memory demands, challenging devices with limited resources.

- Compute Overhead: Running the draft model and rewinding the context after rejected predictions raise compute requirements, which slows inference on compute-bound systems.
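To gauge the extra memory a draft model adds, one can sum its quantized weights and its KV-cache. The sketch below uses the standard KV-cache size formula (keys and values for every layer, KV head, head dimension, and position). The layer, head, and context numbers are assumed values for a hypothetical ~1B-parameter draft model, and `weights_bytes` and `kv_cache_bytes` are illustrative helpers, so check your own model's configuration before relying on the result.

```python
def weights_bytes(params_b: float, bits: int) -> float:
    """Quantized weight footprint: parameters x bits / 8."""
    return params_b * 1e9 * bits / 8


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   context_len: int, bytes_per_elem: int = 2) -> int:
    """Keys and values (hence the factor of 2) cached per layer and position."""
    return 2 * layers * kv_heads * head_dim * context_len * bytes_per_elem


# Hypothetical draft model: 1B params at 4-bit, 16 layers, 8 KV heads,
# head_dim 64, fp16 KV-cache, 8K-token context (all assumed values).
draft_total = weights_bytes(1, 4) + kv_cache_bytes(16, 8, 64, 8192)
print(f"draft model overhead: ~{draft_total / 2**30:.2f} GiB")  # ~0.72 GiB
```

This overhead sits on top of the target model's own weights and KV-cache, which is why memory-constrained devices may not be able to afford it.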

Effective implementation for local inference requires approaches tailored to client hardware:

- Graceful Degradation: If the local machine has limited compute or memory, or when it’s under pressure from other processes, the inference engine should gracefully degrade to traditional decoding to free up system resources.

- Hybrid Compute: On client machines with additional compute units (e.g. a dedicated GPU or NPU), the inference engine should intelligently balance compute and memory load across them to extract even greater performance gains.

- Draft Model Selection: Choosing a draft model that accurately predicts the target model’s output tokens is critical. If no suitable smaller model is available, distillation is necessary to produce one. Balancing quantization levels for speed, size, and accuracy adds complexity. For local inference, smaller draft models leave more memory for other workloads.

- Parameter Tuning: Settings such as the maximum number of predicted tokens and the token acceptance probability threshold affect performance. Accepting too many low-probability tokens can cause the output to diverge from what the target model would produce on its own, so these settings must be tuned for each model pair.
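Parameter tuning and graceful degradation can be combined in a small policy. The sketch below picks the number of draft tokens `k` using the expected-acceptance model from Leviathan et al. (2023), which assumes each draft token is accepted independently with probability `alpha`; real acceptance varies by prompt and model pair. The function names, the `draft_cost_ratio` (draft pass cost relative to a target pass), and the `verify_cost` hook are hypothetical. A result of `k = 0` means falling back to standard decoding.

```python
from typing import Callable, Optional


def expected_tokens_per_round(alpha: float, k: int) -> float:
    """Expected tokens per draft-and-verify round, assuming each draft token
    is accepted independently with probability alpha."""
    if alpha >= 1.0:
        return k + 1.0
    return (1 - alpha ** (k + 1)) / (1 - alpha)


def choose_num_draft_tokens(alpha: float, draft_cost_ratio: float, max_k: int = 8,
                            free_mem_gb: Optional[float] = None,
                            draft_mem_gb: float = 0.0,
                            verify_cost: Callable[[int], float] = lambda k: 1.0) -> int:
    """Return the k with the best estimated speedup, or 0 for plain decoding."""
    if free_mem_gb is not None and free_mem_gb < draft_mem_gb:
        return 0  # not enough memory for the draft model: degrade gracefully
    best_k, best_speedup = 0, 1.0  # k = 0 is standard decoding (speedup 1.0)
    for k in range(1, max_k + 1):
        # Cost of one round in units of a single-token target pass.
        cost = k * draft_cost_ratio + verify_cost(k)
        speedup = expected_tokens_per_round(alpha, k) / cost
        if speedup > best_speedup:
            best_k, best_speedup = k, speedup
    return best_k


# Memory-bound: verifying k drafts costs about one target pass.
print(choose_num_draft_tokens(alpha=0.8, draft_cost_ratio=0.1))  # 6
# Compute-bound: verification cost grows with the k + 1 positions checked.
print(choose_num_draft_tokens(0.8, 0.1, verify_cost=lambda k: k + 1))  # 0
# Not enough free memory for the draft model.
print(choose_num_draft_tokens(0.8, 0.1, free_mem_gb=0.5, draft_mem_gb=0.7))  # 0
```

Under this model, a longer draft only pays off while the draft is cheap and its predictions are accurate; when verification cost scales with the number of tokens checked, the policy never beats plain decoding, consistent with the compute-bound CPU result above.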

No one-size-fits-all solution exists for local inference with speculative decoding, because performance depends on many factors such as compute unit types, memory capacity, and bandwidth. Tailoring strategies to specific hardware configurations, and building a runtime that manages resources efficiently under heavy demand, are essential to maximize performance gains.

Looking Forward

As local AI solutions begin to incorporate complex workflows with multiple LLMs and components that all compete for system resources, techniques like speculative decoding become critical for good performance and user experience. Handling complexities such as selecting optimal draft and target models, tuning quantization, and configuring parameters is key to reaching peak performance on your target hardware.

In future posts, we’ll dive deeper into local inference bottlenecks and explore how techniques like speculative decoding and hybrid compute across CPU, GPU, and NPU can accelerate multi-model workflows, delivering exceptional local AI agents.

*Our theoretical throughput calculations are simplified for clarity. In practice, each LLM iteration reads much more from RAM than just the model’s weights (e.g. KV-cache, context, sampling, and scheduling overheads). Realistic estimates of maximum throughput for both traditional and speculative decoding will therefore be lower than the examples in this post.

Ready to Get Started?

OpenInfer is now available! Sign up today to gain access and experience these performance gains for yourself.