Unlocking the Full Potential of GPUs for AI Inference

GPUs are a cornerstone of modern AI workloads, driving both large-scale model training and real-time inference applications. However, achieving full utilization of these powerful accelerators remains a persistent challenge. Despite their immense theoretical throughput, many AI workloads fail to extract maximum performance due to a combination of architectural, software, and workload-related factors.

While GPUs are designed for parallelism, inefficiencies often arise when workloads do not align with the hardware's underlying execution model. In fact, many modern large language models (LLMs) run at only a fraction of the hardware's theoretical peak. For example, benchmarks have shown that GPU utilization in transformer-based architectures often hovers around 30-50%, with even high-end accelerators struggling to keep all processing cores engaged. That means in many cases more than half of the hardware's potential is going unused! This discrepancy stems from several bottlenecks, including limited memory bandwidth, low core occupancy, and precision mismatches, three factors that significantly impact AI inference efficiency.

Memory Bandwidth Challenges and Performance Impact

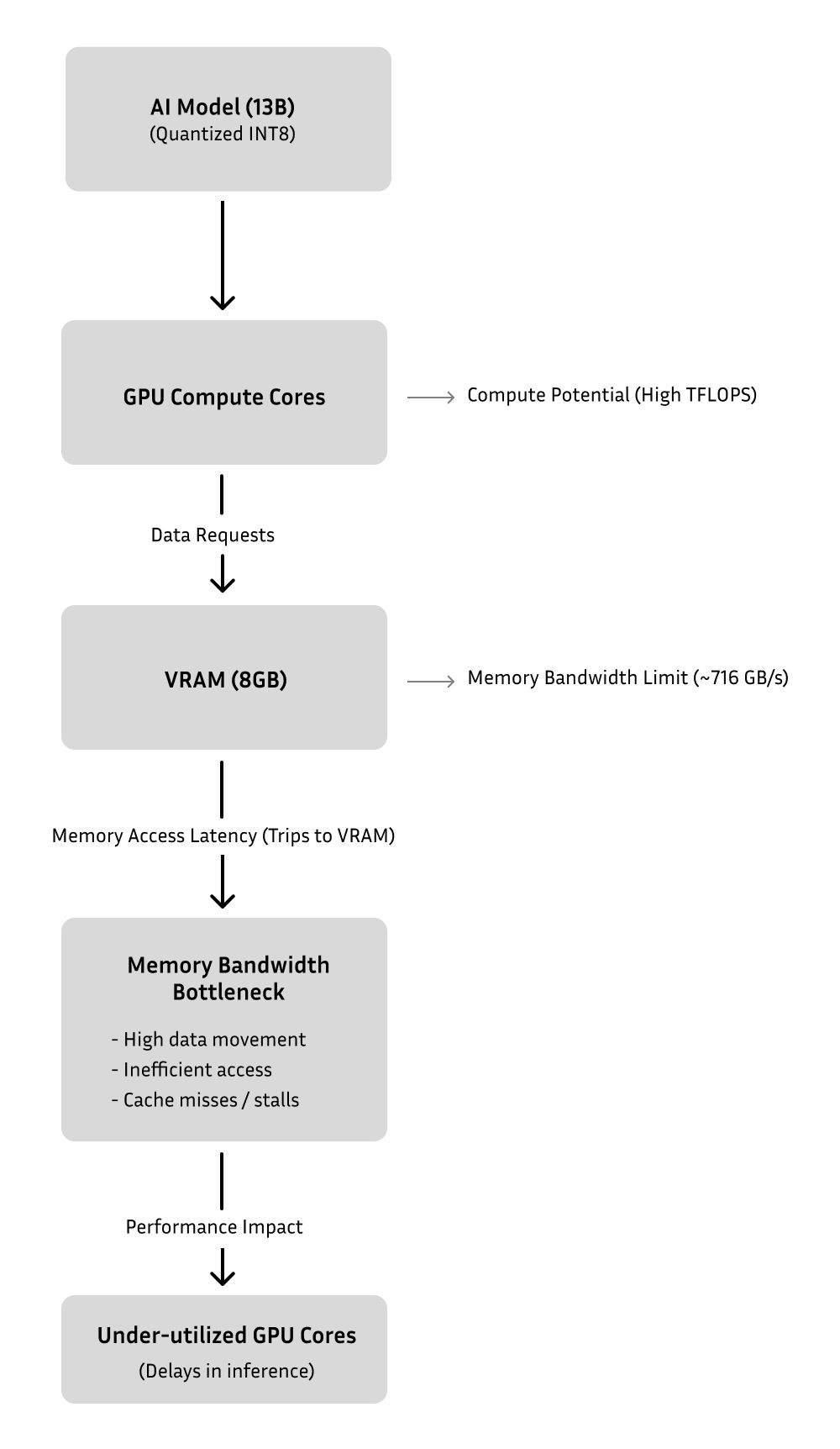

AI models, particularly those with large parameter sizes, often struggle with memory-bound operations where data movement limits execution speed more than compute capacity. This is especially true for transformer-based models, where attention layers and large matrix multiplications lead to excessive memory access.
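
One way to make "memory-bound" concrete is to compare an operation's arithmetic intensity (FLOPs per byte moved) with the hardware's ratio of peak compute to memory bandwidth. The following is a minimal sketch of that comparison for a single matrix-vector product. The function names, the 5120 x 5120 layer shape, and the peak-throughput figure are assumptions chosen for illustration, not measurements of any particular GPU.

```python
def matvec_intensity(rows: int, cols: int, bytes_per_weight: float,
                     bytes_per_activation: float = 2.0) -> float:
    """Arithmetic intensity (FLOPs per byte) of y = W @ x for a rows x cols W."""
    flops = 2.0 * rows * cols  # one multiply and one add per weight
    bytes_moved = (rows * cols * bytes_per_weight             # read the weights
                   + (rows + cols) * bytes_per_activation)    # read x, write y
    return flops / bytes_moved


def is_memory_bound(intensity: float, peak_flops: float,
                    bandwidth_bytes_per_s: float) -> bool:
    """An op is memory-bound when its intensity is below the ridge point."""
    ridge_point = peak_flops / bandwidth_bytes_per_s
    return intensity < ridge_point


# Hypothetical accelerator: both numbers are assumptions for illustration.
PEAK_FLOPS = 100e12   # assumed peak throughput, FLOPs per second
BANDWIDTH = 716e9     # assumed memory bandwidth, bytes per second

intensity = matvec_intensity(5120, 5120, bytes_per_weight=1.0)  # 8-bit weights
memory_bound = is_memory_bound(intensity, PEAK_FLOPS, BANDWIDTH)
print(f"intensity = {intensity:.2f} FLOPs/byte, memory-bound: {memory_bound}")
```

Under these assumptions the layer performs about two FLOPs per byte, far below the ridge point of roughly 140 FLOPs per byte, so the compute units spend most of their time waiting on memory. Batching several requests raises the intensity because each weight that is read gets reused across the batch.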

Consider a relatively small 13-billion-parameter model, such as LLaMA-2 13B, quantized to 8 bits for deployment on a consumer GPU with 16GB of VRAM. At one byte per parameter, the weights alone occupy roughly 13GB, so each forward pass involves gigabytes of memory movement, which can quickly saturate GPU memory bandwidth. For instance, running inference on an NVIDIA RTX 4080, which has a memory bandwidth of approximately 716GB/s, still results in significant delays if data access patterns are not optimized. Despite its high-speed GDDR6X memory, inefficient data movement and poor cache utilization force frequent trips to VRAM and cause stalls that prevent the GPU from reaching its full computational potential, particularly when layers are not efficiently scheduled.

Furthermore, quantization formats, while effective for reducing model size and memory footprint, can introduce inefficiencies in hardware access. Lower-precision formats like INT8 and BF16 shrink the data, but they may require additional dequantization or conversion steps or lead to suboptimal memory alignment, increasing latency. Additionally, tensor cores are optimized for specific precision formats, meaning that mixed-precision models must carefully manage conversions to avoid unnecessary overhead.
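
The 13-billion-parameter example can be turned into a back-of-the-envelope bound. The sketch below assumes batch-size-1 decoding in which every weight is read from VRAM exactly once per generated token, and it ignores the KV cache, activations, and any caching effects. The helper names are hypothetical, and the result is an upper bound under those assumptions rather than a prediction of real throughput.

```python
def weight_bytes(num_params: float, bits_per_weight: float) -> float:
    """Size of the model weights in bytes."""
    return num_params * bits_per_weight / 8


def max_tokens_per_second(num_params: float, bits_per_weight: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Bandwidth-limited ceiling: one full pass over the weights per token."""
    return bandwidth_bytes_per_s / weight_bytes(num_params, bits_per_weight)


NUM_PARAMS = 13e9    # 13-billion-parameter model
BANDWIDTH = 716e9    # approximately 716GB/s, as quoted for the RTX 4080

size_gb = weight_bytes(NUM_PARAMS, 8) / 1e9
bound_int8 = max_tokens_per_second(NUM_PARAMS, 8, BANDWIDTH)
bound_int4 = max_tokens_per_second(NUM_PARAMS, 4, BANDWIDTH)

print(f"8-bit weights: {size_gb:.1f} GB, at most {bound_int8:.0f} tokens/s")
print(f"4-bit weights: at most {bound_int4:.0f} tokens/s")
```

Even with perfect access patterns, the 8-bit model cannot exceed roughly 55 tokens per second on this bandwidth. Halving the bits per weight doubles the ceiling, which is why quantization helps only when the cost of dequantization stays small.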

Core Occupancy and GPU Utilization: Bottlenecks and Metrics

One of the most important factors in GPU efficiency is core occupancy, which refers to the percentage of available processing resources that are actively executing work at any given time. High core occupancy means that most of the GPU's streaming multiprocessors (SMs) are engaged in useful computation, whereas low core occupancy indicates inefficiencies where parts of the GPU remain idle.

Even when memory bandwidth is optimized, inefficient GPU core utilization can lead to performance bottlenecks. As a rule of thumb, strong performance calls for keeping SM occupancy above 75-80%, yet real-world inference workloads often struggle to exceed 50%, leaving a significant share of theoretical FLOPs unused. Ideally, AI workloads should maximize the occupancy of available SMs so that all execution units are actively engaged. However, factors such as non-uniform tensor shapes, suboptimal scheduling, inefficient kernel execution, excessive register usage, branch divergence, and improper thread block sizing can all contribute to underutilization.

High register usage limits the number of warps that can be active on each SM, reducing overall parallelism. Similarly, branch divergence, where threads within the same warp follow different execution paths, serializes those paths and reduces efficiency. Improperly configured thread blocks may also fail to fully utilize available compute resources, leaving execution units idle.
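
To illustrate how register usage and thread block size cap occupancy, here is a simplified calculator for theoretical occupancy, meaning resident warps divided by the maximum warps an SM can hold. The per-SM limits used as defaults are assumed values for illustration, since real limits depend on the device, and the model ignores register and shared-memory allocation granularity. The function name and parameters are hypothetical.

```python
import math


def theoretical_occupancy(
    threads_per_block: int,
    regs_per_thread: int,
    smem_per_block: int = 0,
    *,
    max_threads_per_sm: int = 2048,  # assumed per-SM limit
    max_blocks_per_sm: int = 32,     # assumed per-SM limit
    regs_per_sm: int = 65536,        # assumed per-SM register file size
    smem_per_sm: int = 64 * 1024,    # assumed per-SM shared memory, bytes
    warp_size: int = 32,
) -> float:
    """Fraction of an SM's warp slots filled, ignoring allocation granularity."""
    warps_per_block = math.ceil(threads_per_block / warp_size)
    max_warps_per_sm = max_threads_per_sm // warp_size

    # Each resource imposes its own cap on resident blocks; the smallest wins.
    limits = [max_blocks_per_sm, max_warps_per_sm // warps_per_block]
    if regs_per_thread > 0:
        regs_per_block = regs_per_thread * warps_per_block * warp_size
        limits.append(regs_per_sm // regs_per_block)
    if smem_per_block > 0:
        limits.append(smem_per_sm // smem_per_block)

    resident_blocks = min(limits)
    return resident_blocks * warps_per_block / max_warps_per_sm


for regs in (32, 64, 128):
    occ = theoretical_occupancy(threads_per_block=256, regs_per_thread=regs)
    print(f"{regs:>3} registers/thread -> occupancy {occ:.2f}")
```

With these assumed limits, going from 32 to 128 registers per thread drops theoretical occupancy from 1.00 to 0.25 for 256-thread blocks. For real kernels, the CUDA runtime's `cudaOccupancyMaxActiveBlocksPerMultiprocessor` reports the resident-block count for the actual device, and a profiler is needed to see achieved rather than theoretical occupancy.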

Sometimes, to achieve the absolute highest occupancy, you may need finer control over scheduling, register allocation, and the specific instructions emitted for a given device. In these cases, it may be necessary to bypass or augment higher-level APIs such as CUDA with lower-level code, for example by writing PTX for NVIDIA hardware.

Precision Mismatches and Their Computational Overhead

Modern GPUs support mixed-precision computation, allowing for a blend of FP32, FP16, and INT8 operations. However, incorrect handling of precision or excessive conversions can lead to unnecessary performance penalties.

For instance, an AI inference pipeline that frequently quantizes and dequantizes tensors between INT8, FP16, and FP32 throughout the execution path introduces unnecessary computational overhead and memory movement, leading to performance degradation. This issue is particularly problematic in high-throughput applications where such conversions happen multiple times per forward pass. Quantizing data to INT8 can significantly reduce model size and improve throughput, but if certain layers are not optimized for INT8 computation, the framework may dequantize back to FP16 or FP32, negating much of the efficiency gain. Each quantization and dequantization step requires additional compute and memory bandwidth, creating a bottleneck that can slow down inference. For example, tensor cores in NVIDIA GPUs are optimized for specific precision formats, and improper pipeline management can result in frequent precision transitions, effectively reducing overall GPU utilization.
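
The cost of repeated conversions can be sketched in a few lines. The example below shows a symmetric INT8 quantize and dequantize round trip in plain Python, plus a rough count of the extra memory traffic when each conversion runs as its own unfused pass that reads every element once and writes it once. All names are hypothetical, and the traffic model is an assumption, since fused kernels can avoid much of this cost.

```python
BYTES_PER_ELEMENT = {"int8": 1, "fp16": 2, "fp32": 4}


def quantize_int8(values, scale):
    """Symmetric quantization: scale, round, and clamp to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize_int8(quantized, scale):
    """Map INT8 codes back to approximate real values."""
    return [q * scale for q in quantized]


def conversion_traffic_bytes(num_elements, transitions):
    """Extra bytes moved if each (src, dst) conversion is a separate pass."""
    return sum(
        num_elements * (BYTES_PER_ELEMENT[src] + BYTES_PER_ELEMENT[dst])
        for src, dst in transitions
    )


activations = [0.5, -1.0, 0.25, 0.8]
scale = max(abs(v) for v in activations) / 127
restored = dequantize_int8(quantize_int8(activations, scale), scale)
max_error = max(abs(a - b) for a, b in zip(activations, restored))

# One unsupported layer forces INT8 -> FP16 before it and FP16 -> INT8 after it.
extra_bytes = conversion_traffic_bytes(
    1_000_000, [("int8", "fp16"), ("fp16", "int8")]
)
print(f"max round-trip error: {max_error:.5f}")
print(f"extra traffic per unsupported layer: {extra_bytes / 1e6:.0f} MB")
```

Under this model, a single layer that falls back to FP16 adds about 6 MB of traffic per million activation values, on top of a small rounding error, and the cost repeats for every such layer in every forward pass.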

What’s Next?

For AI developers, navigating these challenges is a daunting task. Optimizing for GPU efficiency requires deep knowledge of hardware behavior, software stack intricacies, and workload-specific tuning. Balancing all of these variables while still delivering a functional and scalable product is incredibly difficult, especially as models grow in complexity. If you are targeting client or edge devices, your application may also need to run on a wide variety of hardware.

Given these challenges, choosing an inference engine designed for peak efficiency is crucial: one that automatically optimizes memory bandwidth usage, core occupancy, and precision handling. OpenInfer is built from the ground up to tackle these inefficiencies, delivering an industry-leading solution that intelligently manages workloads, optimizes hardware utilization, and seamlessly integrates with modern AI pipelines to extract the best possible performance from GPUs. By leveraging OpenInfer, developers can focus on building and deploying AI applications without getting bogged down by the complexities of low-level performance tuning.

Stay tuned as we push the boundaries of AI performance and share more insights from our journey. If you’re passionate about solving complex performance challenges, we’d love to hear your thoughts!

Ready to Get Started?

OpenInfer is now available! Sign up today to gain access and experience these performance gains for yourself.