Introducing Performance Boosts in OpenInfer: 2-3x Faster Than Ollama/Llama.cpp

Engineering Team

At OpenInfer, we strive to redefine the boundaries of Edge AI performance. Our latest update delivers a 2-3x increase in tokens per second (tok/s) compared to Ollama/Llama.cpp. We measured this boost on DeepSeek-R1 distilled into Qwen2 1.5B, Qwen2 7B, and Llama 8B, all with Q8_0 quantization. Here’s how we delivered these gains and why they matter.

Benchmarking Setup

Our benchmarks were conducted on a high-performance laptop equipped with the AMD Ryzen 9 7845HX processor. Known for its exceptional multi-core capabilities, this processor provides an ideal environment for AI and development tasks.

Testing Environment

- Processor: AMD Ryzen 9 7845HX
- Models Tested:
  - DeepSeek-R1 distilled to Qwen2 1.5B Q8_0
  - DeepSeek-R1 distilled to Qwen2 7B Q8_0
  - DeepSeek-R1 distilled to Llama 8B Q8_0
- Runtime: OpenInfer (latest version) vs. Llama.cpp (latest version)
- Metric: Tokens per second (tok/s)
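As a rough illustration of the metric (not the harness used for these benchmarks), tokens per second is the number of generated tokens divided by the wall-clock time spent generating them. In the sketch below, `measure_tok_s` and the stub token stream are hypothetical stand-ins for a runtime's streaming output; they are not OpenInfer or Llama.cpp APIs.

```python
import time
from typing import Callable, Iterable


def measure_tok_s(tokens: Iterable[str],
                  clock: Callable[[], float] = time.perf_counter) -> float:
    """Consume a token stream and return generated tokens per second.

    `tokens` is any iterable yielding one generated token at a time
    (a hypothetical stand-in for a runtime's streaming output).
    """
    start = clock()
    count = 0
    for _ in tokens:
        count += 1
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return count / elapsed


if __name__ == "__main__":
    # Hypothetical stub: 50 tokens, each "generated" after a 10 ms pause.
    def stub_stream(n: int = 50, delay_s: float = 0.01):
        for i in range(n):
            time.sleep(delay_s)
            yield f"tok{i}"

    print(f"{measure_tok_s(stub_stream()):.1f} tok/s")
```

The clock is injectable so the measurement can be tested deterministically; a real comparison would also fix the prompt, the number of generated tokens, and the thread count for both runtimes.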

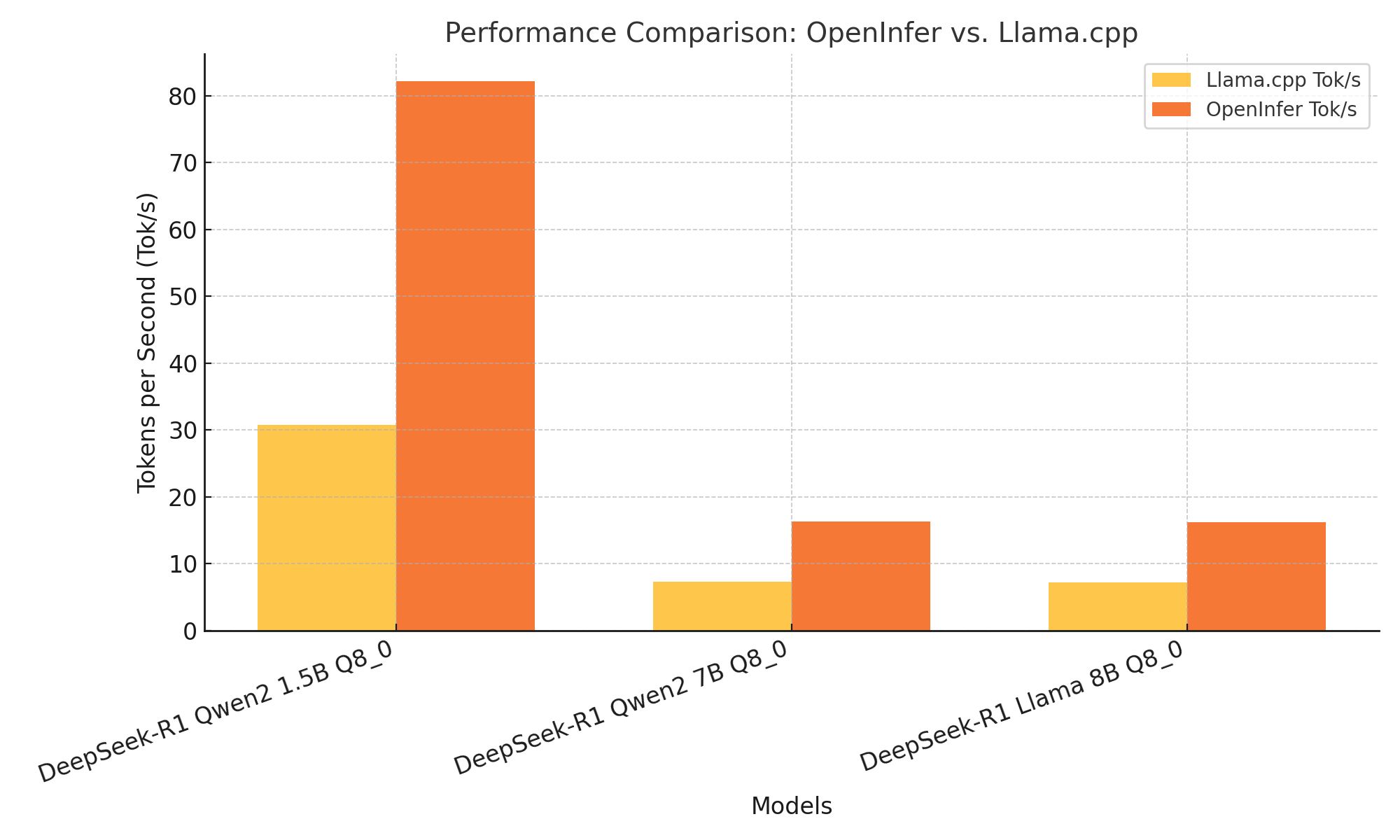

Performance Results

OpenInfer consistently outpaced Llama.cpp across all tested models. The table below highlights the improvements:

| Model | Llama.cpp (tok/s) | OpenInfer (tok/s) | Improvement |
|---|---|---|---|
| DeepSeek-R1 distilled Qwen2 1.5B Q8_0 | 30.75 | 82.19 | 2.673x |
| DeepSeek-R1 distilled Qwen2 7B Q8_0 | 7.35 | 16.3 | 2.218x |
| DeepSeek-R1 distilled Llama 8B Q8_0 | 7.22 | 16.27 | 2.253x |
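The Improvement column is the ratio of OpenInfer's throughput to Llama.cpp's throughput on the same model. This short script recomputes it from the tok/s figures in the table, which makes the reported speedups easy to check.

```python
# Throughput figures (tok/s) from the table: (Llama.cpp, OpenInfer).
results = {
    "DeepSeek-R1 distilled Qwen2 1.5B Q8_0": (30.75, 82.19),
    "DeepSeek-R1 distilled Qwen2 7B Q8_0": (7.35, 16.3),
    "DeepSeek-R1 distilled Llama 8B Q8_0": (7.22, 16.27),
}


def speedup(baseline_tok_s: float, candidate_tok_s: float) -> float:
    """Return how many times faster the candidate is than the baseline."""
    return candidate_tok_s / baseline_tok_s


for model, (llama_cpp, openinfer) in results.items():
    # Prints 2.673x, 2.218x and 2.253x, matching the Improvement column.
    print(f"{model}: {speedup(llama_cpp, openinfer):.3f}x")
```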

Here are the results visualized in a chart:

Key Innovations Behind the Gains

Our performance enhancements stem from several targeted optimizations:

- Efficient Handling of Quantized Values: By streamlining how quantized values are managed across the pipeline, we achieved smoother and faster processing.

- Memory Access Optimization: Enhanced caching strategies and reduced memory overhead minimized delays in data retrieval and execution.

- Model-specific Optimization: Tailoring the engine’s performance for each model architecture ensured peak efficiency without requiring any changes to the models themselves.
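For context on what "quantized values" means for these models: in the Q8_0 format defined by GGML/llama.cpp, weights are stored in blocks of 32 signed 8-bit integers that share a single scale. A dot product can therefore accumulate integer products within each block and apply the scales once per block instead of once per element. The sketch below is a simplified reference model of that format, written for illustration only. It keeps the scale as a Python float (GGML uses float16), and it is not OpenInfer's kernel or a description of its specific optimizations.

```python
import random
from typing import List, Tuple

QK8_0 = 32  # values per block in GGML's Q8_0 format

# One block: (shared scale d, 32 signed 8-bit quants).
# GGML stores d as float16; a Python float is used here for simplicity.
Block = Tuple[float, List[int]]


def quantize_q8_0(x: List[float]) -> List[Block]:
    """Split x into blocks of 32 and quantize each to int8 with one scale."""
    if len(x) % QK8_0 != 0:
        raise ValueError(f"length must be a multiple of {QK8_0}")
    blocks: List[Block] = []
    for start in range(0, len(x), QK8_0):
        chunk = x[start:start + QK8_0]
        amax = max(abs(v) for v in chunk)
        d = amax / 127.0                    # largest magnitude maps to +/-127
        inv_d = 1.0 / d if d else 0.0       # all-zero block -> all-zero quants
        # Python's round() rounds halves to even; C's roundf rounds them away
        # from zero. The difference is negligible for this illustration.
        qs = [round(v * inv_d) for v in chunk]
        blocks.append((d, qs))
    return blocks


def dequantize_q8_0(blocks: List[Block]) -> List[float]:
    """Approximate reconstruction: x[i] ~= d * q[i]."""
    return [d * q for d, qs in blocks for q in qs]


def dot_q8_0(a: List[Block], b: List[Block]) -> float:
    """Dot product of two Q8_0 vectors.

    Integer products are accumulated inside each block, and the two
    float scales are applied once per block rather than per element.
    """
    total = 0.0
    for (da, qa), (db, qb) in zip(a, b):
        sumi = sum(p * q for p, q in zip(qa, qb))  # integer-only inner loop
        total += da * db * sumi
    return total


if __name__ == "__main__":
    random.seed(0)
    x = [random.uniform(-1.0, 1.0) for _ in range(64)]
    y = [random.uniform(-1.0, 1.0) for _ in range(64)]
    exact = sum(p * q for p, q in zip(x, y))
    approx = dot_q8_0(quantize_q8_0(x), quantize_q8_0(y))
    print(f"float dot: {exact:.4f}  Q8_0 dot: {approx:.4f}")
```

Each reconstructed value is within half a quantization step (d / 2) of the original. The integer-only inner loop is what makes block formats like Q8_0 amenable to SIMD integer instructions on CPUs.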

Why This Matters

These improvements bring significant benefits for AI developers:

- Real-time Applications: Faster inference times make real-time AI applications more responsive.

- Accelerated Development: Reduced latency enables quicker experimentation cycles.

- Edge AI Enablement: Advanced models can now run efficiently on edge devices, unlocking new possibilities for on-device intelligence.
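Throughput maps directly onto the per-token latency a user feels in an interactive application. This sketch converts the Qwen2 1.5B figures from the table into milliseconds per token and into the time needed to stream a reply. The 256-token reply length is an arbitrary illustrative choice, and the calculation ignores prompt processing time.

```python
def ms_per_token(tok_s: float) -> float:
    """Average decode latency per token, in milliseconds."""
    return 1000.0 / tok_s


def response_seconds(tok_s: float, n_tokens: int) -> float:
    """Time to generate n_tokens at a steady tok/s (prompt processing excluded)."""
    return n_tokens / tok_s


RESPONSE_TOKENS = 256  # illustrative reply length, not part of the benchmark

for runtime, tok_s in [("Llama.cpp", 30.75), ("OpenInfer", 82.19)]:
    print(f"{runtime}: {ms_per_token(tok_s):.1f} ms/token, "
          f"{response_seconds(tok_s, RESPONSE_TOKENS):.1f} s "
          f"for {RESPONSE_TOKENS} tokens")
```

With these figures, per-token latency drops from about 32.5 ms to about 12.2 ms, and a 256-token reply goes from roughly 8.3 s to roughly 3.1 s.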

What’s Next

This update is just the beginning. We’re already working on GPU optimizations that promise to take OpenInfer’s performance to the next level. Stay tuned for more updates!

Ready to Get Started?

OpenInfer is now available! Sign up today to gain access and experience these performance gains for yourself. Together, let’s redefine what’s possible with AI inference.
